Insightful Updates

Stay informed with the latest news and trends.

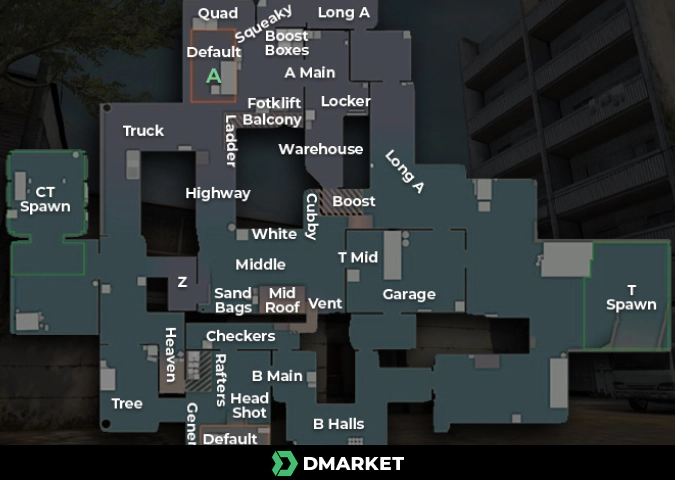

Cache Insights: Winning Strategies for Every Corner

Understanding Cache Memory: How It Impacts Performance in Computing

Cache memory is a small, high-speed, volatile memory that gives the processor fast access to frequently used data and instructions, significantly improving overall system performance. Compared with main memory (RAM), cache memory is much faster and is located closer to the CPU, allowing quicker reads and writes. In modern computing systems, cache memory is typically organized into multiple levels, such as L1, L2, and L3. Each level trades speed for capacity: L1 is the fastest but also the smallest, and each subsequent level is larger but slower. By keeping copies of frequently accessed data close to the processor, the cache reduces how often the CPU must wait on slower main memory, which is essential for keeping up with the demands of today's high-performance applications.
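
To make the speed-versus-size trade-off concrete, the sketch below computes the average memory access time (AMAT) of a three-level hierarchy by applying AMAT = hit time + miss rate × (cost of the next level), working from the last level inward. Here the miss rate is the fraction of lookups reaching a level that it cannot serve. The latencies and miss rates are made-up illustrative values, not measurements of any real CPU, and `amat` is a hypothetical helper name.

```python
from typing import Sequence, Tuple

def amat(levels: Sequence[Tuple[float, float]], memory_latency_ns: float) -> float:
    """Average memory access time for a cache hierarchy.

    levels: (hit_time_ns, local_miss_rate) for each level, ordered L1 first.
    """
    cost = memory_latency_ns  # a lookup that misses every level pays the RAM latency
    for hit_time, miss_rate in reversed(levels):
        # Every access pays this level's hit time; misses also pay the next level's cost.
        cost = hit_time + miss_rate * cost
    return cost

# Illustrative numbers only; real latencies and miss rates vary by CPU and workload.
hierarchy = [(1.0, 0.10), (4.0, 0.30), (12.0, 0.50)]  # L1, L2, L3
print(round(amat(hierarchy, memory_latency_ns=80.0), 2))  # 2.96
print(amat([], memory_latency_ns=80.0))                  # 80.0 (no cache at all)
```

Even with these rough numbers, most accesses are served by the small, fast L1, so the average cost stays far below the 80 ns that every access would pay without a cache.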

Cache memory has a large effect on computing performance. When the CPU needs data, it first checks the cache. If the data is found there (a cache hit), the processor avoids going to slower main memory, which improves processing speed and efficiency. Conversely, a cache miss forces the CPU to fetch the data from a slower level of the hierarchy or from main memory, resulting in a delay. A cache that achieves a high hit rate for a given workload can therefore noticeably speed up applications, particularly those that depend on fast data retrieval, such as gaming, video editing, and scientific simulations. Understanding how cache memory works and its role in a system can help users make informed decisions when selecting hardware or optimizing software.
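
The toy simulator below shows how the access pattern decides whether lookups hit or miss. It is a sketch assuming a hypothetical direct-mapped cache of 64 lines, 64 bytes each; real CPU caches are set-associative and far more complex. Reading consecutive 4-byte values reuses each fetched line 15 more times, while jumping 64 bytes at a time lands on a fresh line on every access, so every lookup misses.

```python
from typing import Iterable, List, Optional, Tuple

def simulate(addresses: Iterable[int], num_lines: int = 64, line_size: int = 64) -> Tuple[int, int]:
    """Count (hits, misses) for a toy direct-mapped cache."""
    tags: List[Optional[int]] = [None] * num_lines  # which memory block each line holds
    hits = misses = 0
    for addr in addresses:
        block = addr // line_size   # memory block containing this byte address
        index = block % num_lines   # the single cache line this block may occupy
        tag = block // num_lines    # distinguishes blocks that share the same line
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag       # fetch the block from memory, replacing the old one
    return hits, misses

sequential = range(0, 4096, 4)     # 1024 consecutive 4-byte reads
strided = range(0, 1024 * 64, 64)  # 1024 reads, each on a different 64-byte line
print(simulate(sequential))  # (960, 64)
print(simulate(strided))     # (0, 1024)
```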

Top 5 Strategies for Optimizing Your Cache Usage

Software caching applies the same idea as hardware caches: it temporarily stores frequently accessed data in a faster location to reduce latency and improve loading times. When optimizing your cache usage, understanding these fundamentals is crucial. Here are five strategies for optimizing your cache usage:

- Choose the Right Caching Strategy: Depending on your application, decide whether you need in-memory caching, disk caching, or a combination of both. In-memory caching is faster but limited by available RAM, while disk caching offers more space at the cost of speed.

- Set Expiry Times: Set an expiration time (time to live, or TTL) for cached items so that stale data is eventually removed and the cache remains relevant.

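- Use Cache Invalidation: When the underlying data changes, invalidate (delete) or update the affected cache entries so the cache reflects the most current data. This is particularly important in dynamic applications, where expiry times alone can leave stale data in place until they run out.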

- Monitor Cache Performance: Regularly analyze metrics such as hit rate, miss rate, and eviction counts to identify bottlenecks, and adjust configurations as needed. Monitoring tools like New Relic or Datadog can help.

- Test and Iterate: Finally, don’t forget to iteratively test your caching strategies. What works best for one application might not suit another, so be prepared to experiment.
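
The expiry, invalidation, and monitoring strategies above can be combined in one small in-memory cache. This is a minimal sketch, not a production cache: `TTLCache` and its methods are hypothetical names, it is not thread-safe, and callers cannot distinguish a cached `None` from a miss. The injectable `clock` parameter exists only so expiry can be demonstrated without sleeping.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple

class TTLCache:
    """Minimal in-memory cache with per-entry expiry, invalidation, and hit/miss counters."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._store: Dict[Hashable, Tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is not None:
            expires_at, value = entry
            if self.clock() < expires_at:
                self.hits += 1
                return value
            del self._store[key]  # expired: drop the stale entry
        self.misses += 1
        return None

    def set(self, key: Hashable, value: Any) -> None:
        self._store[key] = (self.clock() + self.ttl, value)  # every write gets an expiry time

    def invalidate(self, key: Hashable) -> None:
        self._store.pop(key, None)  # remove immediately when the source data changes

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

# Usage with a fake clock so expiry can be shown without sleeping.
now = [0.0]
cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])
cache.set("user:42", {"name": "Ada"})
cache.get("user:42")         # hit: entry is fresh
now[0] = 31.0
cache.get("user:42")         # miss: entry expired after 30 seconds
cache.set("user:42", {"name": "Ada L."})
cache.invalidate("user:42")  # e.g. the user record changed
cache.get("user:42")         # miss: entry was invalidated
print(cache.hits, cache.misses, round(cache.hit_ratio(), 2))  # 1 2 0.33
```

The hit ratio it reports is the same metric a monitoring tool would chart, so tuning the TTL can be checked against a number rather than a guess.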

What Are the Common Pitfalls in Cache Management and How to Avoid Them?

Effective cache management is crucial for optimizing the performance of web applications, yet developers commonly run into several pitfalls. One significant issue is misconfigured cache expiration settings. Expiration times that are too long can lead to stale content being served to users, harming the user experience, while times that are too short cause needless cache misses. Additionally, relying too heavily on manual cache clearing can introduce inconsistencies. Automated cache invalidation, which updates or removes cached entries whenever the underlying data changes, helps ensure that users receive up-to-date content without manual intervention.
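
One common way to automate invalidation is to delete a cached entry in the same code path that writes the underlying data, paired with cache-aside reads (check the cache, fall back to the data store, then populate the cache). The sketch below uses plain dictionaries as stand-ins for a database and a cache; `ProductStore`, `get_price`, and `set_price` are hypothetical names, and the sketch ignores the concurrency issues a real multi-process deployment would have to handle.

```python
from typing import Dict, Optional

class ProductStore:
    """Cache-aside reads plus automatic invalidation on writes (hypothetical example)."""

    def __init__(self) -> None:
        self._db: Dict[str, float] = {}     # stand-in for the authoritative data store
        self._cache: Dict[str, float] = {}  # stand-in for the cache layer

    def get_price(self, sku: str) -> Optional[float]:
        if sku in self._cache:              # cache hit
            return self._cache[sku]
        price = self._db.get(sku)           # cache miss: read from the data store
        if price is not None:
            self._cache[sku] = price        # populate the cache for later reads
        return price

    def set_price(self, sku: str, price: float) -> None:
        self._db[sku] = price
        # Invalidate as part of every write, so no one has to clear the cache by hand.
        self._cache.pop(sku, None)

store = ProductStore()
store.set_price("sku-1", 9.99)
print(store.get_price("sku-1"))  # 9.99 (now cached)
store.set_price("sku-1", 12.50)
print(store.get_price("sku-1"))  # 12.5, not the stale 9.99
```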

Another common pitfall in cache management is neglecting cache hit and miss ratios. A low hit rate (the fraction of requests served from the cache) indicates that the cache is not serving requests effectively, which can slow down performance. Regularly monitoring these metrics can help identify when to adjust caching strategies, such as increasing the cache size or optimizing cache keys. Finally, failing to document cache policies can create confusion among team members and lead to inconsistent caching practices. Maintaining clear documentation of caching strategies helps teams avoid these problems and keep cache management efficient and consistent.
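
Python's standard-library `functools.lru_cache` reports hit and miss counts through `cache_info()`, which makes it a convenient way to see how cache size affects hit rate. In this sketch, `load_value` and `hit_rate` are hypothetical helpers, and the workload cycles through five keys. With room for only four entries, least-recently-used eviction always discards the key that is needed next, so every call misses; with room for eight, only the first pass misses.

```python
from functools import lru_cache
from typing import Sequence

def load_value(key: int) -> int:
    """Hypothetical stand-in for a slow lookup (database, disk, or network)."""
    return key * key

def hit_rate(cache_size: int, keys: Sequence[int]) -> float:
    # Wrap the loader in a fresh LRU cache so each experiment starts empty.
    cached = lru_cache(maxsize=cache_size)(load_value)
    for key in keys:
        cached(key)
    info = cached.cache_info()  # CacheInfo(hits, misses, maxsize, currsize)
    total = info.hits + info.misses
    return info.hits / total if total else 0.0

workload = [0, 1, 2, 3, 4] * 3          # 15 requests over 5 distinct keys
print(hit_rate(4, workload))            # 0.0
print(round(hit_rate(8, workload), 2))  # 0.67
```

A hit rate that jumps sharply once the cache can hold the working set is a sign that size, not key design, was the bottleneck.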